When I support a school, I’m obsessed with two things -

research-based best bets,

and the school context.

I know that what will make the most difference to student progress and the lives of teachers is school culture, which I’m going to define as anything which makes students working hard, or very hard, the cultural norm.

I don’t advise and train school leaders on cultural change. By and large, the culture of a school represents the values of the leaders, and more specifically the head. Our values are deeply connected to our emotions. I can’t change anyone’s values, and changing their emotions - well, welcome to therapy. That’s not me.

In that sense, school improvement is hard.

But, when we look at it in terms of teaching strategies and the curriculum, no. School improvement is easy. We just make it hard, unwittingly scuppered by our best intentions.

I’m going to demonstrate this by offering a case study. Imagine Durrington High School signed me up as their SIP.

They are a research school, and I subscribe to their excellent blog, Class Teaching. This will be my source material for this role play of a case study.

Teaching and Learning

So imagine I’m meeting with Chris Runeckles, Director of Durrington Research School - Disciplined inquiry for teachers

He tells me about their very well-planned, self-directed CPD, where teachers try to answer a research question of their own devising.

“Teachers focus on an aspect of teaching that they are looking to improve,

this year represents the sixth time that our teachers have spent the early autumn term writing what we term a Teacher Inquiry Question,

which they will pursue for the rest of the academic year.

how they can use the available evidence from research to address this in their classrooms

and then how they will know if it’s making a difference.”

Here are some questions I would ask:

SIP Questions

How do teachers decide what they most need to improve?

What is the rationale for having only one focus?

What is the rationale for having that single focus for a whole year, rather than for two half terms?

What has been the impact over the last 6 years on Progress 8?

The Impact

Then we would look at the available data to assess that impact. I’ll do my best with what is publicly available (Chris would obviously have more precise data, including that for 2024).

2023: P8 of 0.15

2022: P8 of 0.42

2019: P8 of 0.22

SIP Questions

What does the data tell us about the impact of this 6 year approach to teachers’ inquiry?

If the teachers did improve the progress of their classes, what other factors are at play which meant Progress 8 as a whole did not improve?

How have you measured these other negative factors?

What other causes might there be for this flat long term trend?

It is highly likely that many factors will be external. We can only measure these by proxy. I use the FFT Schools Like Yours website which finds the 50 schools nationally with the most similar cohorts to yours.

This tells us that Durrington is ranked 26th out of the 51 schools - so exactly average.
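As a quick check on that arithmetic, here is a minimal sketch in Python. The function names are my own, purely for illustration; the only figures taken from above are the rank of 26 and the 51 schools in the comparison. Strictly speaking, the middle of a ranked list is the median position rather than the mean.

```python
def middle_rank(n_schools: int) -> float:
    """Position of the middle school when n_schools are ranked 1..n_schools."""
    return (n_schools + 1) / 2


def schools_above_and_below(rank: int, n_schools: int) -> tuple[int, int]:
    """How many schools sit above and below a given rank."""
    return rank - 1, n_schools - rank


print(middle_rank(51))                  # middle position among 51 schools
print(schools_above_and_below(26, 51))  # schools ranked above and below 26th
```

With 25 schools above and 25 below, a rank of 26th out of 51 sits exactly in the middle of the group.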

These are the top 10 similar schools in 2023.

SIP Questions

Do we know of any specific local factors which will be affecting your students much more than other schools with similar cohorts?

What evidence do you have for those factors?

Who should visit any of these schools to find out what might be making the difference? How can we find out which ones are worth visiting?

Averages are Limited

An intervention may have a small impact, or no impact when we measure it. That does not mean it was ineffective. For some it might have been brilliantly successful, while the poor performance of other outliers can bring the average down to 0.

Instead we want to find out if there are conditions in which it worked well.
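To make that concrete, here is a minimal sketch in Python. The numbers are invented for illustration and are not Durrington's data; the department labels and function names are hypothetical. It shows how an overall average of zero can hide one group where the intervention worked well and another where it went badly.

```python
from statistics import mean

# Hypothetical change in class progress after an inquiry, one value per teacher.
# These figures are made up purely to illustrate the point.
hypothetical_changes = {
    "dept_A": [0.4, 0.5, 0.3],
    "dept_B": [-0.4, -0.5, -0.3],
}


def overall_mean(groups: dict[str, list[float]]) -> float:
    """Average change across every teacher, ignoring department."""
    return mean([value for values in groups.values() for value in values])


def group_means(groups: dict[str, list[float]]) -> dict[str, float]:
    """Average change within each department."""
    return {name: mean(values) for name, values in groups.items()}


print(round(overall_mean(hypothetical_changes), 2))
print({name: round(m, 2) for name, m in group_means(hypothetical_changes).items()})
```

The whole-school figure comes out at zero, while the per-department figures show a gain of about 0.4 in one team and a matching loss in the other. That is why the questions below ask about individual teachers and departments rather than the headline number.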

SIP Questions

Are there teachers whose inquiry led to measurable improvement in progress?

Are there departments which were organised in a way which led to most of their teachers achieving a measurable improvement in progress?

Let’s look at the Progress 8 of classes to see if we can find teachers and departments who are succeeding.

Performance Tables

We need to search the available data - I have to use what is publicly available, and that is only for 2023.

SIP Questions

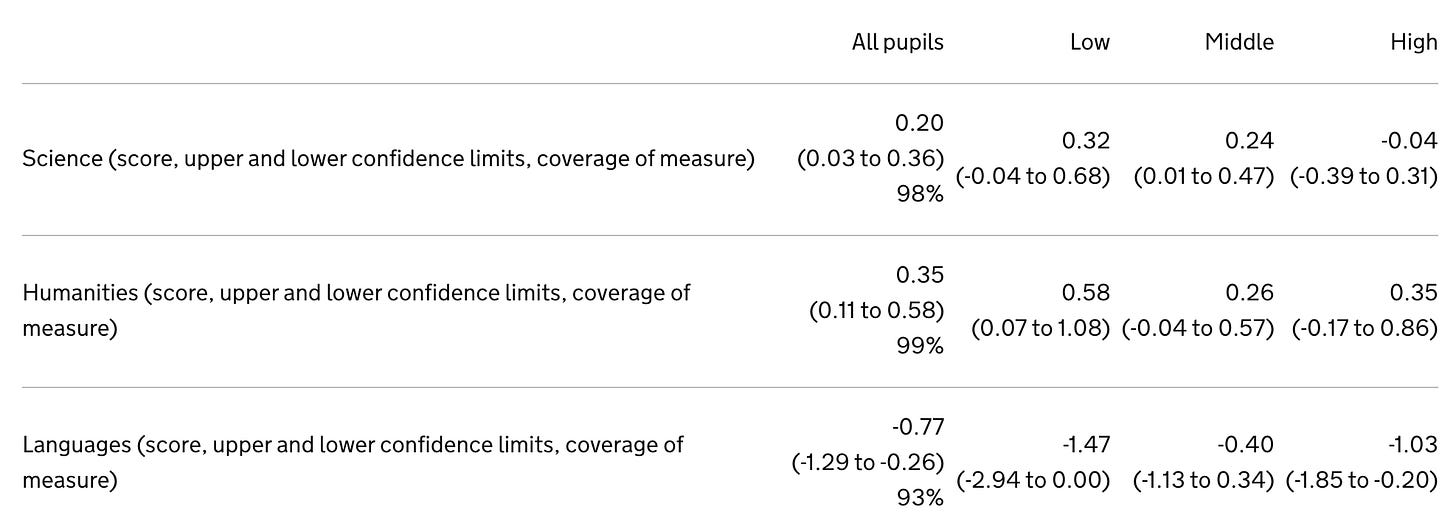

Let’s group the teacher inquiry projects by department. What do we notice about humanities, science and maths?

When we look at their inquiry questions, can we rank them by gut instinct? This is our measure: if the teacher did this with all classes, all the time, how many extra GCSE grades would they get in their class?

Which inquiries did we think would have the most impact, and how does that map against their class and department results?

Having done this, which inquiries look the most promising for developing across the school?

Which inquiries appear to have no impact when compared to the class and department results?

Let’s group them. What do they have in common, and what does this suggest is a poor inquiry question?

What if the difference is not the choice of the inquiry question, but the way the teacher followed up measuring the impact and adapting as they went?

Could we interview the teachers who seem to have an impact to see how they approached it, to see whether there are any common features we could use to improve how teachers monitor and develop their inquiry?

Humanities have a standout confidence interval and progress - what did they do?

Can we interview the humanities team to find out if they did anything which they think had an even higher impact than their inquiry questions?

When we look at the pattern of department success, do we get a feeling that this is being driven by the quality of teacher inquiry over the last 6 years, or are there more important factors?

What are these factors - if any - and how might we prioritise these?

Having looked at the evidence for successful inquiry questions which clearly led to progress, can we establish a checklist which will help all teachers:

Select an excellent question

Monitor the impact during each term so that it leads to long term impact
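One way to test the earlier question, about how our gut-instinct ranking of inquiries maps against class results, is a rank correlation. The sketch below is my own illustration in Python, not something the school uses. It assumes each inquiry has been given a predicted rank and an actual rank based on class progress, with no tied ranks, and the example rankings are invented.

```python
def spearman_rho(predicted_rank: list[int], actual_rank: list[int]) -> float:
    """Spearman rank correlation for two rankings of the same items (no ties)."""
    n = len(predicted_rank)
    if n != len(actual_rank) or n < 2:
        raise ValueError("Need two rankings of equal length, with at least 2 items")
    # Sum of squared differences between each item's two ranks.
    d_squared = sum((p - a) ** 2 for p, a in zip(predicted_rank, actual_rank))
    return 1 - (6 * d_squared) / (n * (n ** 2 - 1))


# Hypothetical example: five inquiries, ranked by gut instinct and by class results.
gut_instinct = [1, 2, 3, 4, 5]
class_results = [2, 1, 3, 5, 4]
print(round(spearman_rho(gut_instinct, class_results), 2))
```

A value near 1 would suggest our instincts about high impact inquiries are sound, a value near 0 would suggest they tell us little, and a negative value would suggest we are backing the wrong questions. With only a handful of inquiries this is a prompt for discussion rather than proof.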

Implementation

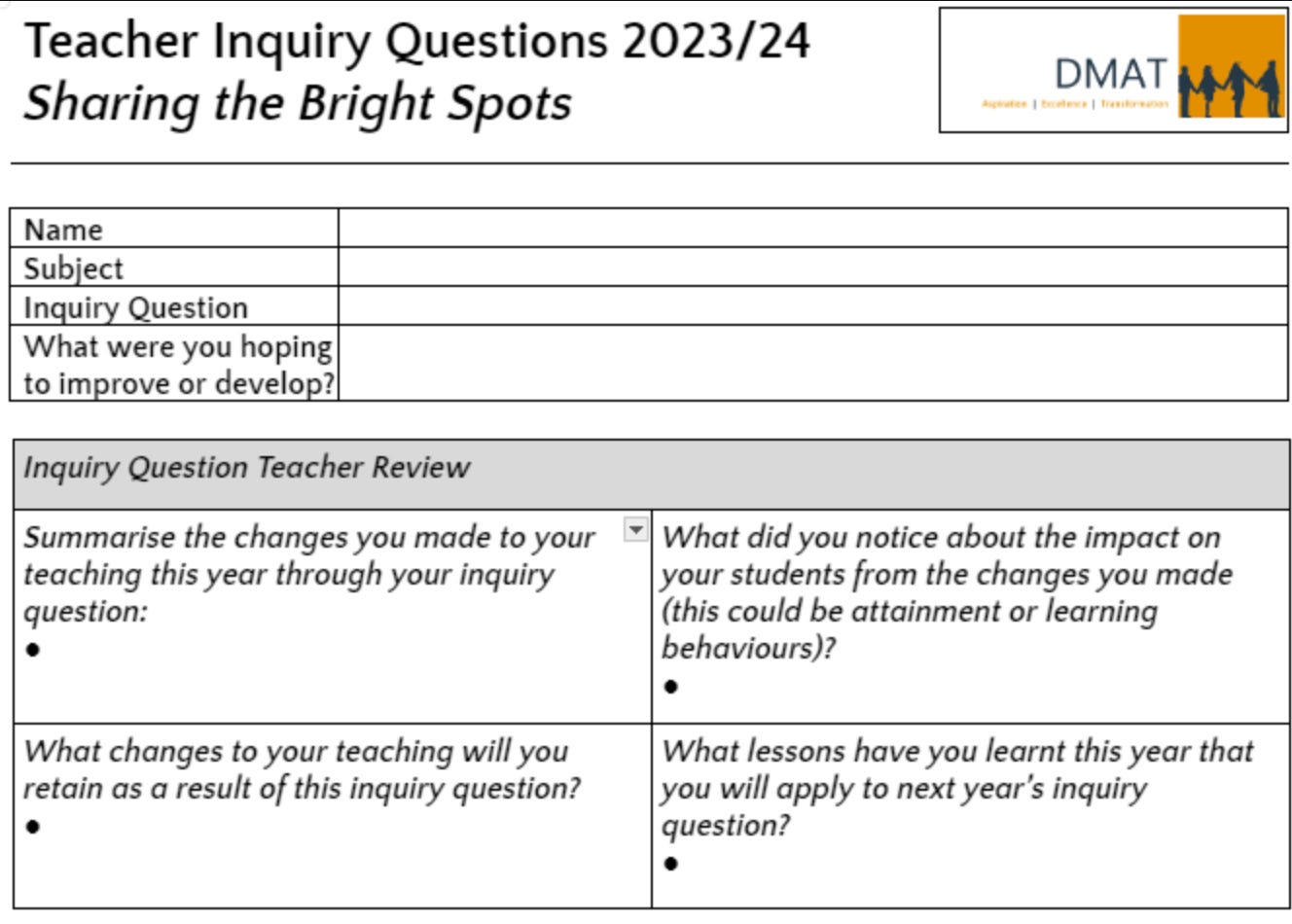

Next, Chris tells me how they implement teacher inquiry across the school. Here is a simple pro-forma teachers fill in:

SIP Questions

You’ve focused on attainment or learning behaviours. Would progress be a better measure than both?

Would a row asking teachers how they measured impact be useful in giving them a clearer picture of their impact?

Would there be real advantages to a row asking teachers to outline how they will measure the impact of the next inquiry?

Did our analysis of each department above suggest that grouping these by department might lead to more progress?

Should we ask teachers not just what they will retain, but how they will share this so that the team consider adopting it?

Do we need to make heads of department oversee the results of these inquiries and report back on what the whole team will do differently next year based on their experiments last year?

Department Inquiries

Next Chris outlines how the inquiry questions have to align with the school improvement plan.

The inquiry questions are therefore set through conversation between the curriculum leader and the teacher.

This ensures they connect directly to the DIP, which, as described, sets the direction for all activity so that our precious time is directed at a narrow set of shared priorities.

My experience, and the evidence, suggests that only a narrowed and collaborative approach results in lasting behaviour change.

SIP Questions

If the teacher inquiry has to be selected because research and/or gut instinct suggests it will have a significant impact, does it need to align with the Department Improvement Plan?

If you have an average or unsuccessful department, is the leader likely to choose the best inquiry questions?

If the department inquiry questions have to align with the School Improvement Plan, what evidence do we have that leaders choose the best priorities to develop? (See the school Progress 8 figures over time).

How will we discover what we don’t know if we control what the inquiry questions can be?

Can we actually solve all of these issues by simply showing teams how to choose high impact questions and approaches to implementing them?

If we can’t, how can you design this year’s inquiries so that we can guide departments and teachers more effectively next year?

Teacher Inquiry Question

SIP Questions

If we measured the impact of vocabulary teaching every 10 weeks, would we be able to adapt how we taught it to lead to better outcomes by the end-of-year assessment?

Is disadvantage the right focus for such a small cohort? It will give us unreliable data. Is disadvantage one of the main areas the school needs to improve?

(This does need to improve, but is also pretty much the national average).

If live marking is going to have an impact, won’t we get a much better measurement of impact by measuring the whole class, rather than a small cohort who will have too broad a confidence interval?

If students are live marked at random, aren’t they more likely to try hard than if they know they will only be selected in a particular lesson? Is selecting by row counterproductive to all students working hard?

What evidence does this teacher have to suggest that ‘securing attention’ is the main thing in their teaching which will have most impact? If it is going to have most impact, shouldn’t they also measure it with KS4 where the impact has more consequences, and the measurement is more reliable?

The presentation question is highly specific, with lots of whole school, as well as department implications. Shouldn’t the measure be progress in the maths mocks, rather than the quality of writing? In other words, who cares if the writing improves significantly but the progress doesn’t? (Sure, we care - but there will be some other inquiry which would potentially lead to greater progress).

This model of testing the inquiry after a term looks like it will allow this teacher to improve it, or answer the question and choose a better inquiry. Could we ask our teachers with historically high impact inquiries whether waiting till the end of the school year to measure impact should be changed?
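Several of the questions above turn on small cohorts giving a confidence interval that is too broad. Here is a minimal sketch of why, in Python. It uses the standard normal-approximation interval for a mean, which I am assuming is close to how published progress confidence intervals are built. The standard deviation of 1.25 is a placeholder I have chosen for illustration, not a published figure, and the function name is my own.

```python
import math


def ci_half_width(std_dev: float, n_pupils: int, z: float = 1.96) -> float:
    """Half-width of an approximate 95% confidence interval for a mean score."""
    if n_pupils < 1:
        raise ValueError("n_pupils must be at least 1")
    return z * std_dev / math.sqrt(n_pupils)


# Assumed spread of pupil-level progress scores (illustrative placeholder only).
ASSUMED_STD_DEV = 1.25

for n in (6, 25, 100):
    print(n, round(ci_half_width(ASSUMED_STD_DEV, n), 2))
```

Under these assumptions a group of 6 students has an interval roughly twice as wide as a class of 25, and quadrupling the number of students only halves the width. A real improvement in a handful of students is therefore very hard to tell apart from noise, which is the case for measuring the whole class.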

Are teachers and heads of department picking the most high impact inquiries? To answer that, we need to look at the data. Once again, the performance tables:

This looks like a pattern of teachers teaching to the middle, raising the progress of low attainers, but reducing the progress of high prior attaining students. Which departments would benefit most from a focus on teaching to the top?

Would the heads of department be the people best placed to inquire into teaching to the top?

Support and Challenge

Schools I work with closely have called me a ‘Support and Challenge Partner’. I really like that as a model of what I do.

Although the challenge is, well, challenging, it is supportive, because:

It is always aimed at helping you improve progress

I have no agenda I’m pushing from my own bias or experience - we just look at the data.

P.S. Schools I support don’t ever get named in a post. Although this post does name Durrington, I have only used information which is already in the public domain, and which I acknowledge may be out of date, as the 2024 data may show a different story.

Finally, and counterintuitively, once we have improved culture, improving teaching does not lead to most progress. The biggest impact comes from the curriculum.
